We are now in the era of GPUs, AI, and ML. AI is widely seen as a secure sector for high-paying jobs, and you have almost certainly seen ads about AI and ML on TV, in the news, and on YouTube. In this blog, you will get a clear picture of AI and ML as one of the best use cases for GPUs.

What are GPUs

A GPU, or Graphics Processing Unit, was originally designed to accelerate the rendering of 3D graphics and images in computer games. A Central Processing Unit (CPU) is optimized for sequential processing and handles tasks like system control and general data processing, while a GPU is optimized for parallel processing. Because of this difference, GPUs are equipped with thousands of cores that can work on many small tasks at the same time.
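To make the sequential versus parallel idea concrete, here is a minimal sketch in plain Python. It multiplies a matrix by a vector twice: once row by row, and once by handing each row to a separate worker. The function names (`dot`, `matvec_sequential`, `matvec_parallel`) and the tiny matrix are made up for illustration. Python threads are only a stand-in here and will not make this faster; the point is that every output value depends only on its own row, which is the kind of independent work a GPU spreads across its cores.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Dot product of one matrix row with a vector."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_sequential(matrix, vec):
    # One row after another, the way a single core walks through the work.
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    # Each output element depends only on its own row, so the rows can be
    # handed to separate workers. This is an analogy for GPU threads, not
    # real GPU code, and it is not expected to run faster in Python.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


# Hypothetical example data.
matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
vec = [1, 0, -1]

print(matvec_sequential(matrix, vec))
print(matvec_parallel(matrix, vec))
```

Both calls return the same list, because splitting the rows across workers changes who does the work, not the result.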

Why GPUs are Important in AI

- Parallel Processing Capabilities: Artificial Intelligence, particularly Deep Learning, involves processing vast amounts of data. Neural networks, which are a cornerstone of many AI models, consist largely of operations such as matrix multiplications that can be parallelized. A GPU’s multi-core architecture is very well suited for this.

- Higher Bandwidth Memory: GPUs come with high bandwidth memory like GDDR6 or HBM2, which allows faster data transfers. In the domain of AI, where large datasets and big models are common, this faster memory is crucial.

- Speed: Training a deep learning model can take days to weeks with standard CPUs. GPUs can significantly cut down this time, making experimentation and model tuning feasible.

- Libraries and Frameworks: As GPUs have become more popular for AI tasks, there has been robust growth in libraries and frameworks optimized for GPU computation. Frameworks like TensorFlow and PyTorch, together with NVIDIA’s CUDA platform, have made it easier to leverage GPU power for AI.
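The memory bandwidth point in the list above is easy to check with some quick arithmetic. The sketch below estimates how long it takes to read all of a model's weights once at a given bandwidth. Everything in it is an assumption for illustration: the helper `transfer_time_seconds`, the 1 billion parameter model, and the two bandwidth figures are hypothetical and are not measurements of any real GPU or memory type.

```python
def transfer_time_seconds(num_bytes, bandwidth_gb_per_s):
    """Time to move num_bytes once at the given bandwidth (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


# Hypothetical model: 1 billion parameters stored as 32-bit floats.
params = 1_000_000_000
bytes_per_param = 4
model_bytes = params * bytes_per_param

# Illustrative bandwidth figures only, not specs of any real product.
for label, bandwidth in [("slower memory", 50), ("faster memory", 500)]:
    seconds = transfer_time_seconds(model_bytes, bandwidth)
    print(f"{label} ({bandwidth} GB/s): {seconds * 1000:.0f} ms per pass over the weights")
```

Under these assumptions, ten times the bandwidth means one tenth of the waiting time for every pass over the weights, and training makes a very large number of such passes.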

Key Usage Areas of GPUs in AI and ML

- Deep Learning Training: This is one of the most computationally intensive aspects of AI. Whether it’s image classification using Convolutional Neural Networks (CNNs) or natural language processing with Transformers, GPUs play a crucial role in reducing training times.

- Inference: Once AI models are trained, they need to be deployed to make predictions. While inference doesn’t always require as much computational power as training, real-time applications or those that need to process large volumes of data can greatly benefit from GPUs.

- Simulations: Reinforcement learning, an AI technique that learns optimal actions through trial and error, often requires simulations. GPUs accelerate these simulations, allowing agents to learn faster.

- Data Processing and Augmentation: Preprocessing data is a crucial step before feeding it into AI models. Tasks like image augmentation, data normalization, and other transformation techniques can be parallelized and thus accelerated using GPUs.

- Research and Experimentation: Researchers and scientists working on state-of-the-art AI techniques often require the raw power and speed provided by GPUs to test new algorithms, architectures, and methods.
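The data processing and augmentation item in the list above can be sketched in a few lines. This is plain Python on nested lists, not GPU code, and the helpers `normalize`, `horizontal_flip`, and `augment_batch` are hypothetical names chosen for this example. It shows why these steps parallelize well: every pixel is normalized on its own, and every image in the batch is handled independently of the others.

```python
def normalize(image, mean, std):
    # Every pixel is transformed independently of every other pixel.
    return [[(pixel - mean) / std for pixel in row] for row in image]


def horizontal_flip(image):
    # Mirror each row left to right.
    return [row[::-1] for row in image]


def augment_batch(batch, mean=0.5, std=0.5):
    # For each image, keep the normalized version and add a flipped copy.
    # No image depends on another, so a GPU could process them all at once.
    augmented = []
    for image in batch:
        normalized = normalize(image, mean, std)
        augmented.append(normalized)
        augmented.append(horizontal_flip(normalized))
    return augmented


# Hypothetical batch with one tiny 2x2 grayscale image.
batch = [[[0.0, 0.5], [1.0, 0.5]]]
augmented = augment_batch(batch)
print(len(augmented), "images after augmentation")
for image in augmented:
    print(image)
```

In practice, a GPU-enabled framework would apply the same kind of transformations to whole batches of images at once instead of looping over them one by one.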

The Future

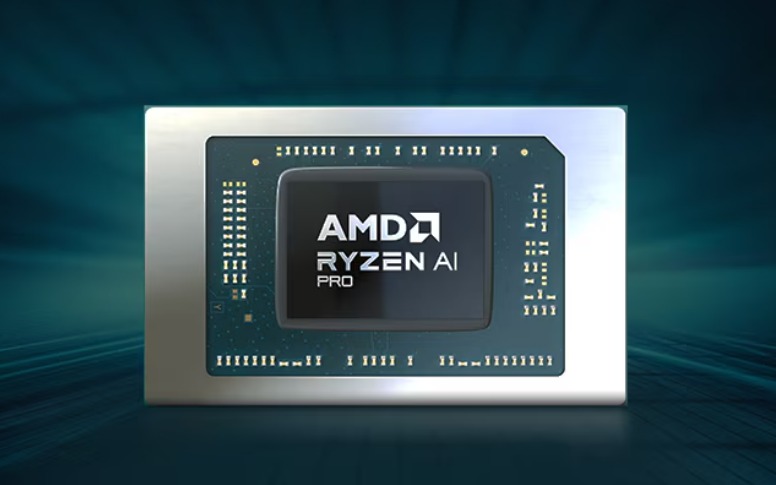

As AI models grow in size and complexity, the demand for more powerful computational tools also rises. The emergence of specialized AI accelerators and Tensor Processing Units (TPUs) is evidence of this growing demand. However, GPUs remain a vital tool in the AI practitioner’s toolkit. Companies like NVIDIA, AMD, and others continue to innovate, releasing GPUs tailored explicitly for AI workloads.

Interestingly, NVIDIA, a leading company in this sector, now has a market capitalization of around 4 trillion dollars.

Conclusion

GPUs have evolved beyond just graphics rendering to become an essential hardware component for AI. Their parallel processing capabilities, coupled with a supportive ecosystem of libraries and frameworks, make them indispensable for AI researchers, engineers, and practitioners.